In recent experiments, we demonstrated a 40-gram pocket drone flying autonomously in an indoor environment using nothing but a 4-gram stereo camera. With a new computer vision method called Edge-FS, the pocket drone can detect obstacles with stereo vision and estimate its own velocity in real time, all on board an STM32F4 microprocessor (168 MHz, 196 kB). This work is featured in a recently published issue of IEEE Robotics and Automation Letters.

We chose a lightweight stereo camera as the pocket drone’s main sensor because many properties of the surrounding environment can be detected with computer vision. However, many current algorithms are too complex to run at sufficiently high frame rates on the microprocessor on board our pocket drones. In related research, we developed an efficient stereo-vision algorithm for the DelFly Explorer, which enabled it to avoid obstacles autonomously. Unlike the DelFly, however, the pocket drone has unstable flight characteristics and requires velocity stabilization to maintain hover. To estimate the ego-motion of the pocket drone, we use optical flow.

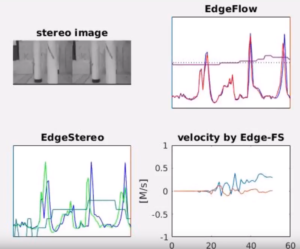

We developed an extremely efficient computer vision algorithm, called Edge-FS (flow + stereo), that performs stereo vision and estimates the drone’s own velocity simultaneously. It uses edge distributions, a highly compressed representation of the low-resolution image that comes from the stereo camera. On an STM32F4 microprocessor, it needs only 17.5 ms of computation time, allowing the velocity estimation to run at 25 Hz, the full frame rate of our cameras.
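To give a feel for why edge distributions are so cheap to work with, here is a minimal Python sketch of the general idea, not the on-board implementation. It collapses each image into a one-dimensional histogram of horizontal edge strength per column, then finds the pixel shift that best aligns two histograms. The function names `edge_histogram` and `best_shift`, the central-difference gradient, and the single global shift are all assumptions made for illustration; the published method may differ in detail. Matching consecutive frames gives a flow-like displacement, and matching the left and right images in the same way would give a stereo disparity.

```python
def edge_histogram(image):
    """Sum absolute horizontal gradients down each column of a grayscale image.

    `image` is a list of rows, each a list of pixel intensities.
    (Hypothetical helper: a central difference stands in for the edge filter.)
    """
    width = len(image[0])
    hist = [0] * width
    for row in image:
        for x in range(1, width - 1):
            hist[x] += abs(row[x + 1] - row[x - 1])
    return hist


def best_shift(hist_a, hist_b, max_shift):
    """Return the shift s that minimizes the mean absolute difference
    between hist_a[x] and hist_b[x + s] over the overlapping columns.

    Assumes max_shift is smaller than the histogram width.
    """
    width = len(hist_a)
    best, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        lo = max(0, -s)
        hi = min(width, width - s)
        cost = sum(abs(hist_a[x] - hist_b[x + s]) for x in range(lo, hi)) / (hi - lo)
        if cost < best_cost:
            best, best_cost = s, cost
    return best


def make_frame(bar_start, width=32, height=8, bar_width=4):
    """Synthetic test frame: a bright vertical bar on a dark background."""
    row = [255 if bar_start <= x < bar_start + bar_width else 0 for x in range(width)]
    return [list(row) for _ in range(height)]


# The bar moves 3 pixels to the right between two frames.
frame_prev = make_frame(10)
frame_next = make_frame(13)
shift = best_shift(edge_histogram(frame_prev), edge_histogram(frame_next), max_shift=8)
print(shift)
```

Because the matching runs on one value per column instead of on every pixel, the search cost scales with the image width rather than its area. Turning a pixel shift into a metric velocity would additionally need the scene depth and the camera parameters, which this sketch leaves out.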

We programmed the pocket drone to react to the obstacles detected by Edge-Stereo while controlling its velocity as estimated by Edge-Flow. With these simple principles, it could fly autonomously within an office-like indoor environment. For more information, please view the following YouTube video or take a look at the YouTube playlist to check out all the experiments. More general information on ongoing research can be found here.

References

K. McGuire, G. de Croon, K. Tuyls, and B. Kappen, “Efficient optical flow and stereo vision for velocity estimation and obstacle avoidance on an autonomous pocket drone,” IEEE Robotics and Automation Letters, vol. 2, iss. 2, pp. 1070-1076, 2017.

PDF: https://ieeexplore.ieee.org/iel7/7083369/7339444/07833065.pdf

Matlab Code: https://github.com/knmcguire/EdgeFlow_matlab
