TU Delft scientists have uncovered how deep neural networks perceive distance in a single image. They report their findings in an article on the public arXiv repository (https://arxiv.org/pdf/1905.07005.pdf).

Obstacles higher in the image are seen as further away

Scientists Tom van Dijk and Guido de Croon show that deep neural networks trained on images recorded by self-driving cars rely mostly on the vertical position of objects on the road in the image. Objects positioned higher in the image are seen as further away. The networks determine an object's position in the image by looking at its contrast with the road surface. When that contrast is insufficient, current distance estimation networks can miss objects on the road entirely.
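Why is vertical position such a useful cue? A simple textbook model helps. This is our own illustration, not a description of what the networks compute internally. Take a pinhole camera mounted at height h above a flat road with its optical axis parallel to the road. A road point at distance Z then appears at image row y = y_h + f·h/Z, where y_h is the horizon row and f is the focal length in pixels. Inverting this gives Z = f·h/(y − y_h), so the closer a point lies to the horizon (the higher it sits in the image), the further away it is. The function name and the camera values below are hypothetical.

```python
def distance_from_row(y_contact: float, y_horizon: float,
                      focal_px: float, cam_height_m: float) -> float:
    """Distance (m) to the point where an object meets a flat road.

    Assumes a pinhole camera whose optical axis is parallel to the road,
    mounted cam_height_m above it. Image rows increase downwards, so road
    points lie below the horizon row (y_contact > y_horizon).
    """
    offset = y_contact - y_horizon
    if offset <= 0:
        raise ValueError("contact point must lie below the horizon")
    return focal_px * cam_height_m / offset


# Hypothetical camera: 700 px focal length, mounted 1.5 m above the road,
# horizon at image row 180.
for row in (380, 280, 230):  # move the contact point up the image
    print(row, distance_from_row(row, 180, 700.0, 1.5))
# 380 5.25
# 280 10.5
# 230 21.0
```

The object's size does not appear in this formula. That makes position a cue that works for any object on the road, provided the row where the object meets the road can be found in the image.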

The findings in the article are important for evaluating how well the networks will generalize to different real-world conditions. Moreover, they can guide how these networks are trained, so that they can be applied reliably in autonomous robots.

Deep neural networks as key technology for autonomous robots

Deep neural networks are an enabling technology for autonomous robots such as self-driving cars. Over the past decade, deep neural networks have achieved human-competitive and even super-human performance on visual tasks such as traffic sign recognition. Recently, there has been enormous progress in how well deep neural networks can judge distances from a single image. An attractive property of these networks is that they can learn to estimate distances without any human supervision or labelling.

Understanding how these distance estimation networks work is essential for determining their reliability. Psychological research has shown that humans use visual cues such as the apparent size of known objects, occlusion (closer objects hiding parts of objects further away), and the position of an object in the image. In principle, these visual cues are also available to deep neural networks, but it is currently not known which cues the networks actually exploit.

Understanding deep neural networks by adopting methods from psychology

It is notoriously hard to analyze the function of the millions of neurons and connections in deep neural networks. To circumvent this issue, Van Dijk and De Croon studied the networks in a way not unlike psychological experiments with humans. They treated the deep neural networks as a black box and subjected them to various tests with manipulated images.

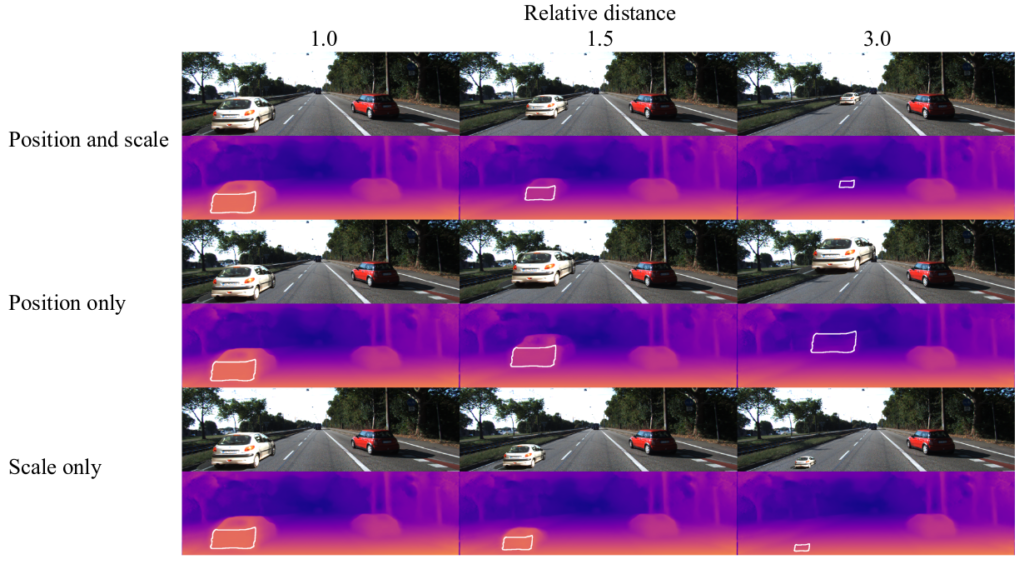

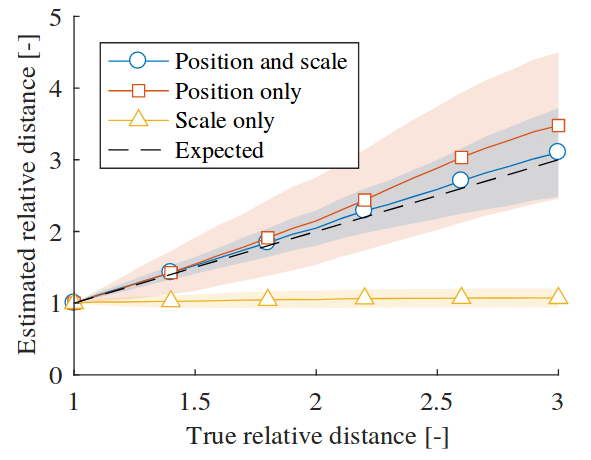

One of the central tests in the study involved inserting cars into an empty part of the road while varying the car's size and its position on the road. “A car that is higher up in the image is seen as further away by the deep net, while making the car bigger or smaller has no effect on the estimated distance”, says Tom van Dijk. “The intriguing thing is that we only realized very late in the research that we ourselves actually have a similar impression: a down-scaled car low in the image gives us the impression of a toy remote-controlled car driving close to us on the highway.”
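The scaling test can be illustrated by comparing two textbook cues. This is a toy comparison under flat-road pinhole-camera assumptions, not a model of the networks' actual computation. A size-based estimate, Z = f·H/h_px, needs the real object height H (here assumed to be 1.5 m for a car) and changes whenever the car is scaled. A position-based estimate depends only on the row where the car touches the road. That row stays fixed if the car is scaled while its wheels are kept on the same row, which is our assumption about how the scaling is done. All names and numbers are hypothetical.

```python
def size_cue(pixel_height: float, real_height_m: float, focal_px: float) -> float:
    # Distance implied by apparent size, assuming the real height is known.
    return focal_px * real_height_m / pixel_height


def position_cue(y_contact: float, y_horizon: float,
                 focal_px: float, cam_height_m: float) -> float:
    # Distance implied by where the object meets a flat road (pinhole camera).
    return focal_px * cam_height_m / (y_contact - y_horizon)


FOCAL_PX, CAM_HEIGHT_M, Y_HORIZON = 700.0, 1.5, 180.0  # hypothetical camera
CAR_HEIGHT_M = 1.5                                      # assumed car height

# A car whose wheels touch the road at row 280, drawn 100 px tall,
# then scaled to half and double size with its wheels kept on row 280.
for scale in (0.5, 1.0, 2.0):
    px = 100.0 * scale
    print(f"scale {scale}: size cue {size_cue(px, CAR_HEIGHT_M, FOCAL_PX):.2f} m, "
          f"position cue {position_cue(280.0, Y_HORIZON, FOCAL_PX, CAM_HEIGHT_M):.2f} m")
```

The position cue gives 10.5 m for all three cars, while the size cue gives 21.00, 10.50 and 5.25 m. A network that relies on position, as the study found, therefore ignores the scaling.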

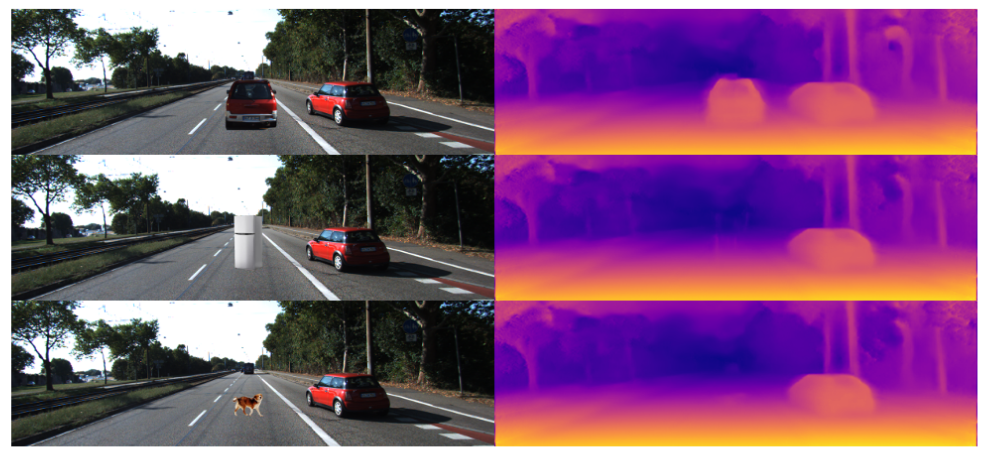

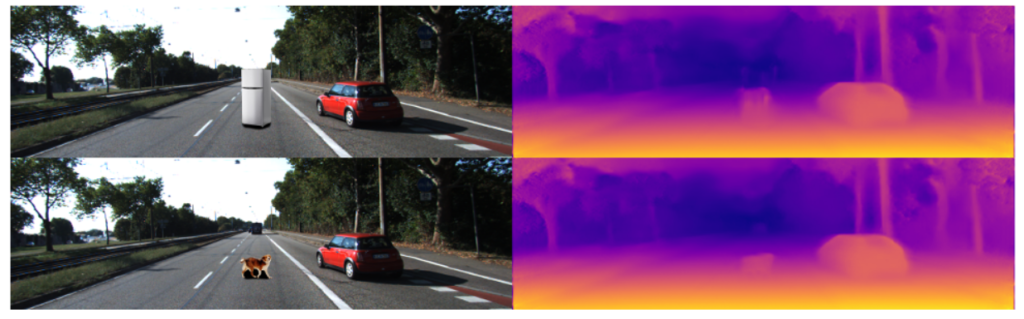

Not detecting a large white fridge

The findings in the study help to assess whether the self-learning networks will generalize to unseen situations. “In principle, object position is a reliable visual cue that does not depend on specific objects present in the training set”, says Guido de Croon. “Hence, we were surprised that the network did not see a large white fridge that we copy-pasted onto the road. It turned out that our copy-pasting did not create enough contrast with the road surface for the network to detect it. This finding therefore suggests that the training set should include low-contrast conditions.”

De Croon also emphasizes that the impact of the research may extend beyond distance estimation. “Deep neural networks now perform many visual tasks that have been studied thoroughly in humans, so I think that we can enrich our toolbox for understanding deep neural networks by looking more closely at the methods used in psychological studies.”