We introduce Memory-Augmented Varying-speed Reinforcement Learning (MAVRL), a novel obstacle avoidance pipeline. MAVRL takes depth maps, together with the states of the drone and the target, as inputs and outputs acceleration commands. A model predictive controller (MPC) from Agilicious then derives body-rate and thrust commands for the drone from these accelerations.
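To make the input/output contract concrete, here is a minimal sketch of one policy step. It is an illustration only: the `Observation` layout, the linear stand-in policy, and the `a_max` limit are assumptions made for this example, not the network or parameters used in MAVRL, and the MPC stage from Agilicious is deliberately left out.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Observation:
    depth: np.ndarray         # (H, W) depth map; resolution is an assumption
    drone_state: np.ndarray   # e.g. position and velocity (layout assumed)
    target_state: np.ndarray  # e.g. target position (layout assumed)


def flatten_observation(obs: Observation) -> np.ndarray:
    """Stack the depth map and both state vectors into one input vector."""
    return np.concatenate([obs.depth.ravel(), obs.drone_state, obs.target_state])


def policy_step(obs: Observation, weights: np.ndarray, a_max: float = 5.0) -> np.ndarray:
    """Hypothetical linear stand-in for the learned policy.

    Returns a 3D acceleration command clipped to [-a_max, a_max] per axis.
    A downstream controller (the MPC in the real pipeline) would track it.
    """
    x = flatten_observation(obs)
    return np.clip(weights @ x, -a_max, a_max)


rng = np.random.default_rng(0)
obs = Observation(
    depth=rng.uniform(0.5, 10.0, size=(8, 8)),
    drone_state=np.zeros(6),
    target_state=np.array([10.0, 0.0, 1.5]),
)
weights = rng.normal(scale=0.1, size=(3, 8 * 8 + 6 + 3))
accel_cmd = policy_step(obs, weights)
```

The clipping step is a design choice for the sketch: bounding the commanded acceleration keeps the output in a range a low-level controller can plausibly track.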

🌍 Why Does This Matter?

Our research represents a significant step forward in the field of robotics and autonomous navigation. MAVRL’s adaptive capabilities and memory-enhanced learning open new possibilities for drones in critical applications such as search and rescue, environmental monitoring, and delivery services.

🚁 The Challenge

Autonomous drones often rely on obstacle avoidance algorithms that use a fixed flight speed. While effective in simple scenarios, this approach fails to balance agility and safety as environments become more complex. Additionally, many learning-based systems cannot retain past observations, which can leave drones “stuck” in challenging situations.

🔬 What Did We Do?

To address these limitations, we built MAVRL as a reinforcement learning pipeline. Here’s how it works:

- Adaptive Speed Policy: MAVRL dynamically adjusts flight speed based on the environment’s complexity, allowing for faster movement in open areas and careful navigation in cluttered ones.

- Memory-Augmented Latent Space: We designed a latent representation that explicitly retains memory of past depth observations, enabling the drone to anticipate obstacles outside its immediate field of view.

- Seamless Real-World Deployment: After minimal fine-tuning, MAVRL was successfully deployed on a real drone, demonstrating its practicality in real-world scenarios.
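The first two ideas above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: `RecurrentLatent` is a plain recurrent update standing in for the memory-augmented latent space, and `speed_limit` is a hand-written heuristic that only mimics the behaviour the learned policy exhibits (fast in open space, slow near clutter). Neither is the architecture or policy used in MAVRL.

```python
import numpy as np


class RecurrentLatent:
    """Toy memory: the latent mixes new depth features with the previous latent."""

    def __init__(self, feat_dim: int, latent_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Random, untrained weights; a real system would learn these.
        self.W_in = rng.normal(scale=0.1, size=(latent_dim, feat_dim))
        self.W_h = rng.normal(scale=0.1, size=(latent_dim, latent_dim))
        self.h = np.zeros(latent_dim)

    def reset(self) -> None:
        """Forget everything seen so far."""
        self.h = np.zeros_like(self.h)

    def step(self, depth_features: np.ndarray) -> np.ndarray:
        """Update the latent from the current features and the stored latent."""
        self.h = np.tanh(self.W_in @ depth_features + self.W_h @ self.h)
        return self.h


def speed_limit(depth: np.ndarray, v_min: float = 1.0, v_max: float = 5.0,
                d_clear: float = 8.0) -> float:
    """Heuristic stand-in: scale speed linearly with the nearest obstacle distance."""
    nearest = float(np.min(depth))
    frac = min(max(nearest / d_clear, 0.0), 1.0)
    return v_min + frac * (v_max - v_min)


# An obstacle seen one frame ago still shapes the latent after it leaves the view.
mem = RecurrentLatent(feat_dim=16, latent_dim=8)
obstacle_frame = np.ones(16)
empty_frame = np.zeros(16)

mem.step(obstacle_frame)
h_with_history = mem.step(empty_frame).copy()

mem.reset()
h_without_history = mem.step(empty_frame).copy()

v_open = speed_limit(np.full((4, 4), 10.0))     # nothing within d_clear
v_cluttered = speed_limit(np.full((4, 4), 2.0)) # obstacle 2 units away
```

Here `h_with_history` and `h_without_history` differ even though both were computed from the same empty frame, which is the property a memory-augmented latent relies on to account for obstacles outside the current field of view.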

🔍 Highlights from Our Experiments

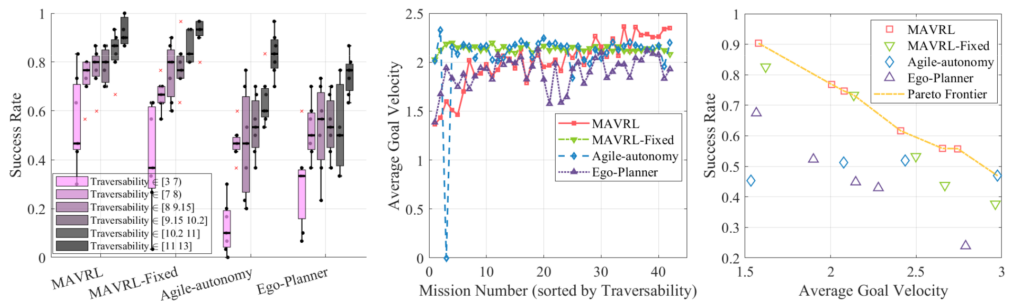

- Simulation Results: MAVRL consistently achieved higher success rates and more efficient obstacle avoidance than baseline methods.

- Benchmarking: Extensive tests showed that MAVRL’s adaptive speed policy not only improved navigation efficiency but also traced out a Pareto frontier in the trade-off between speed and safety.

- Real-World Deployment: Our drone navigated cluttered indoor and outdoor environments effectively, proving the robustness of MAVRL under real-world conditions.
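For readers unfamiliar with the term, a Pareto frontier is the set of configurations that no other configuration beats on both objectives at once. The sketch below extracts it from (speed, success rate) pairs. The numbers are made up purely to exercise the function and are not results from our experiments; `pareto_front` is a helper written for this example.

```python
def pareto_front(points):
    """Return the points not dominated by any other point.

    Each point is (speed, success_rate); higher is better on both axes.
    A point is dominated if another point is at least as good on both
    axes and strictly better on at least one.
    """
    front = []
    for i, (s_i, r_i) in enumerate(points):
        dominated = any(
            s_j >= s_i and r_j >= r_i and (s_j > s_i or r_j > r_i)
            for j, (s_j, r_j) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append((s_i, r_i))
    return sorted(front)


# Illustrative placeholder values only (average speed, success rate).
candidates = [(1.0, 0.95), (2.0, 0.90), (2.0, 0.80), (3.0, 0.70), (2.5, 0.60)]
front = pareto_front(candidates)
```

With these placeholder values, `(2.0, 0.80)` drops out because `(2.0, 0.90)` is equally fast and safer, and `(2.5, 0.60)` drops out because `(3.0, 0.70)` is both faster and safer.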

📄 Learn More

- Paper link: MAVRL

- Code link: https://github.com/tudelft/mavrl.git