Inspired by how ants visually recognize their environment and count their steps to navigate back home, MAVLab researchers have developed an insect-inspired navigation strategy for small, lightweight robots. This innovative approach allows these robots to return home after long journeys with minimal computation and memory requirements (1.16 kB per 100 meters). The research, published in Science Robotics on July 17, 2024, opens new possibilities for applications like warehouse inventory monitoring and industrial gas leak detection.

Navigational Challenges and Innovations

Tiny robots, weighing from tens to a few hundred grams, present unique opportunities for real-world applications because they are light, safe, and able to maneuver in narrow spaces. However, their small size limits their computational and memory resources, which complicates autonomous navigation.

Traditional autonomous navigation systems rely on heavy, power-intensive sensors or external infrastructure like GPS and wireless beacons, which are unsuitable for tiny robots. GPS is often inaccurate in cluttered environments and unusable indoors, while maintaining beacons can be costly or impractical.

This is why some researchers have turned to nature for inspiration. Insects are especially interesting as they operate over distances that could be relevant to many real-world applications, while using very scarce sensing and computing resources.

Bio-inspired Navigation Strategy

Biologists have an increasing understanding of the underlying strategies used by insects. Specifically, insects combine keeping track of their own motion (termed “odometry”) with visually guided behaviors based on their low-resolution, but almost omnidirectional visual system (termed “view memory”). Whereas odometry is increasingly well understood even up to the neuronal level, the precise mechanisms underlying view memory are still less well understood. Hence, multiple competing theories exist on how insects use vision for navigation. One of the earliest theories proposes a “snapshot” model. In this model, an insect such as an ant is proposed to occasionally make snapshots of its environment. Later, when arriving close to the snapshot, the insect can compare its current visual percept to the snapshot, and move to minimize the differences. This allows the insect to navigate, or ‘home’, to the snapshot location, removing any drift that inevitably builds up when only performing odometry.
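The snapshot model described above can be illustrated with a minimal Python sketch. It is a toy simulation, not the method used in the study: the landmark layout, the 32-pixel panoramic renderer, the blob sharpness, and the names `panoramic_view`, `image_difference`, and `home_to_snapshot` are all assumptions made for illustration. The simulated robot is also assumed to keep a fixed heading and to be able to evaluate candidate positions directly, which a real robot could only do by moving.

```python
import math

# Hypothetical toy world: four point landmarks around the snapshot location.
LANDMARKS = [(5.0, 0.0), (0.0, 5.0), (-5.0, 0.0), (0.0, -5.0)]
N_PIXELS = 32  # low-resolution, omnidirectional 1D "image"


def panoramic_view(pos):
    """Render a 1D panorama: each landmark is a smooth blob at its bearing,
    and appears brighter when it is closer."""
    x, y = pos
    image = []
    for i in range(N_PIXELS):
        pixel_dir = 2.0 * math.pi * i / N_PIXELS
        value = 0.0
        for lx, ly in LANDMARKS:
            bearing = math.atan2(ly - y, lx - x)
            dist = math.hypot(lx - x, ly - y)
            blob = math.exp(4.0 * (math.cos(pixel_dir - bearing) - 1.0))
            value += blob / (1.0 + dist)
        image.append(value)
    return image


def image_difference(a, b):
    """Sum of squared pixel differences between two panoramas."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def home_to_snapshot(start, snapshot, step=0.2, min_step=0.01, max_iters=500):
    """Move in whichever of 8 directions most reduces the difference to the
    stored snapshot; halve the step size when no direction helps."""
    pos = start
    best = image_difference(panoramic_view(pos), snapshot)
    for _ in range(max_iters):
        if step < min_step:
            break
        candidates = [
            (pos[0] + step * math.cos(k * math.pi / 4.0),
             pos[1] + step * math.sin(k * math.pi / 4.0))
            for k in range(8)
        ]
        scored = [(image_difference(panoramic_view(c), snapshot), c)
                  for c in candidates]
        cand_diff, cand = min(scored)
        if cand_diff < best:
            pos, best = cand, cand_diff
        else:
            step /= 2.0
    return pos


# Take a snapshot at the origin, then home to it from a nearby start point.
snapshot = panoramic_view((0.0, 0.0))
final = home_to_snapshot((0.8, 0.5), snapshot)
print(final)
```

The sketch starts inside the region where reducing the image difference leads back to the snapshot location. Started much farther away, the same procedure could stall or move in the wrong direction.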

“Snapshot-based navigation can be compared to how Hansel tried not to get lost in the fairy tale of Hansel and Gretel. When Hansel threw stones on the ground, he could get back home. In our case, the stones are the snapshots,” explains Tom van Dijk, first author of the study. “As with a stone, for a snapshot to work, the robot has to be close enough to the snapshot location. If the visual surroundings differ too much from those at the snapshot location, the robot may move in the wrong direction and never get back. Hence, one has to use enough snapshots or, in the case of Hansel, drop a sufficient number of stones. On the other hand, dropping stones too close to each other would deplete Hansel’s stones too quickly. In the case of a robot, using too many snapshots leads to large memory consumption. Previous works in this field typically had the snapshots very close together, so that the robot could first visually home to one snapshot and then to the next.”

“The main insight underlying our strategy is that you can space snapshots much further apart if the robot travels between snapshots based on odometry,” says Guido de Croon, Full Professor in bio-inspired drones and co-author of the article. “Homing will work as long as the robot ends up close enough to the snapshot location, i.e., as long as the robot’s odometry drift falls within the snapshot’s catchment area. This also allows the robot to travel much further, as the robot flies much slower when homing to a snapshot than when flying from one snapshot to the next based on odometry.”
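The trade-off between snapshot spacing and odometry drift can be sketched with a few lines of Python. This is a simplified illustration under stated assumptions, not the model from the article: drift is assumed to grow linearly with distance travelled, homing is assumed to remove the drift completely, and the function names and all numeric values are hypothetical placeholders rather than figures from the study.

```python
import math


def max_snapshot_spacing(catchment_radius_m, drift_fraction):
    """Longest odometry leg whose worst-case drift still ends inside the
    snapshot's catchment area (assumes drift = drift_fraction * distance)."""
    return catchment_radius_m / drift_fraction


def snapshots_needed(route_length_m, spacing_m):
    """Number of snapshots needed to cover a route at a given spacing."""
    return math.ceil(route_length_m / spacing_m)


def can_follow_route(leg_lengths_m, drift_fraction, catchment_radius_m):
    """Return True if every odometry leg ends inside the next catchment area.
    Homing at each snapshot is assumed to reset the accumulated drift."""
    for leg in leg_lengths_m:
        drift = drift_fraction * leg
        if drift > catchment_radius_m:
            return False  # homing would start outside the catchment area
    return True


# Placeholder values for illustration only (not taken from the study).
spacing = max_snapshot_spacing(catchment_radius_m=1.0, drift_fraction=0.25)
print(spacing, snapshots_needed(100.0, spacing))
print(can_follow_route([4.0, 2.0], 0.25, 1.0))
print(can_follow_route([4.0, 8.0], 0.25, 1.0))
```

Under these assumptions, a larger catchment area or a lower drift rate allows longer legs between snapshots, which means fewer snapshots to store for the same route.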

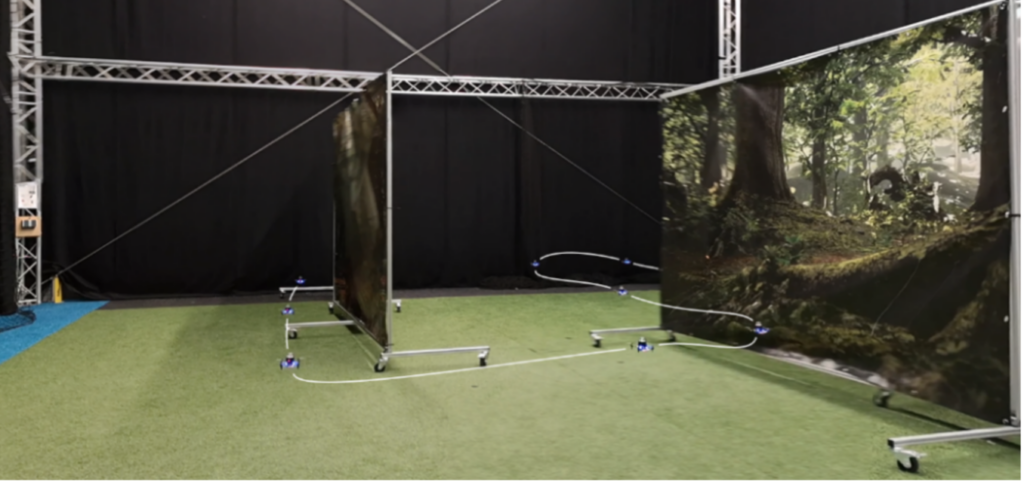

The proposed insect-inspired navigation strategy allowed a 56-gram “CrazyFlie” drone, equipped with an omnidirectional camera, to cover distances of up to 100 meters using only 1.16 kilobytes of memory. All visual processing ran on a tiny computer called a “micro-controller”, a type of chip found in many cheap electronic devices.

Putting the Robot Technology to Work

“The proposed insect-inspired navigation strategy is an important step on the way to applying tiny autonomous robots in the real world,” says Guido de Croon. “The functionality of the proposed strategy is more limited than that provided by state-of-the-art navigation methods. It does not generate a map and only allows the robot to come back to the starting point. Still, for many applications this may be more than enough. For instance, for stock tracking in warehouses or crop monitoring in greenhouses, drones could fly out, gather data and then return to the base station. They could store mission-relevant images on a small SD card for post-processing by a server. But they would not need them for navigation itself.”

Media: https://surfdrive.surf.nl/files/index.php/s/aNel46A2cHBqGLS

Publication: Visual Route-following for Tiny Autonomous Robots. Journal article in: Science Robotics, vol. 9, no. 92, pp. eadk0310, 2024, ISSN: 2470-9476.