During an experiment performed on board the International Space Station (ISS), a small drone successfully taught itself to judge distances using only one eye, scientists reported at the 67th International Astronautical Congress (IAC) in Guadalajara, Mexico. Although humans effortlessly estimate distances with one eye, it is not clear how we learn this ability, nor how robots should learn it. The experiment was designed jointly by the Advanced Concepts Team (ACT) of the European Space Agency (ESA), the Massachusetts Institute of Technology (MIT) and the Micro Air Vehicles lab (MAV-lab) of Delft University of Technology (TU Delft), and was the final step of a five-year research effort aimed at testing advanced artificial intelligence (AI) concepts in orbit.

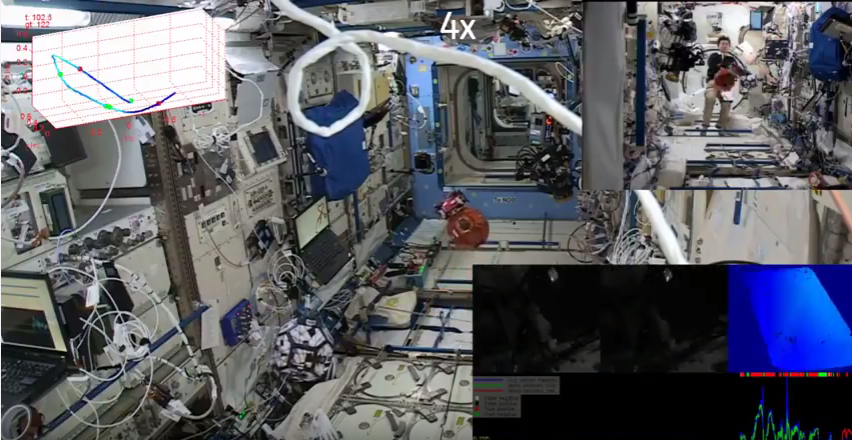

The paper, titled “Self-supervised learning as an enabling technology for future space exploration robots: ISS experiments”, describes how the drone navigated inside the ISS while recording stereo-vision information about its surroundings with its two ‘eyes’ (cameras). From these recordings it learned to estimate the distances to the walls and obstacles it encountered, so that once the stereo camera was switched off it could explore autonomously using only one ‘eye’ (a single camera).
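This is the essence of self-supervised learning: while both cameras are on, stereo vision acts as a built-in teacher that labels every frame with a distance, and the robot learns to predict that label from the single-camera view alone. The Python sketch below illustrates the idea under loudly stated assumptions. The feature vectors, camera parameters and the choice of k-nearest-neighbour regression are hypothetical stand-ins, not details of the ISS experiment; only the stereo relation (depth = focal length × baseline / disparity) is the standard one for a rectified camera pair.

```python
import math
from typing import List, Sequence, Tuple


def stereo_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


class MonocularDepthLearner:
    """k-nearest-neighbour regressor from monocular image features to depth.

    Hypothetical: both the feature vectors and the use of kNN are
    illustrative assumptions, not the method used in the experiment.
    """

    def __init__(self, k: int = 2) -> None:
        self.k = k
        self.samples: List[Tuple[Tuple[float, ...], float]] = []

    def add_sample(self, features: Sequence[float], depth_m: float) -> None:
        # Stereo phase: store the single-camera features with the stereo label.
        self.samples.append((tuple(features), depth_m))

    def predict(self, features: Sequence[float]) -> float:
        # Monocular phase: average the depths of the k most similar stored views.
        if not self.samples:
            raise ValueError("no training samples yet")
        ranked = sorted(
            (math.dist(stored, features), depth) for stored, depth in self.samples
        )
        nearest = ranked[: self.k]
        return sum(depth for _, depth in nearest) / len(nearest)


# Hypothetical camera parameters and recordings, for illustration only.
FOCAL_PX = 400.0
BASELINE_M = 0.25
recordings = [
    # (hypothetical monocular feature vector, stereo disparity in pixels)
    ((0.9, 0.1), 100.0),
    ((0.8, 0.2), 50.0),
    ((0.2, 0.7), 25.0),
    ((0.1, 0.9), 20.0),
]

learner = MonocularDepthLearner(k=2)
# Phase 1: stereo on; every frame is labelled automatically, no human input.
for features, disparity in recordings:
    learner.add_sample(features, stereo_depth(disparity, FOCAL_PX, BASELINE_M))

# Phase 2: stereo off; depth is estimated from the single camera alone.
print(learner.predict((0.85, 0.15)))  # 1.5
print(learner.predict((0.15, 0.8)))   # 4.5
```

The key design point is that no human ever labels the data: the stereo pair supplies the training targets, which is what makes the approach usable on a robot operating far from its designers.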

Humans can close one eye and still tell whether an object is far away, but in robotics many consider this extremely hard. “It is a mathematical impossibility to extract distances to objects from one single image, as long as one has not experienced the objects before,” says Guido de Croon of Delft University of Technology, one of the principal investigators of the experiment. “But once we recognise something to be a car, we know its physical characteristics and we may use that information to estimate its distance from us. A similar logic is what we wanted the drones to learn during the experiments.” The drone, however, had to learn this in weightlessness, where no direction is privileged over another, an additional difficulty it also had to overcome.
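The car example can be made concrete with the standard pinhole-camera model, offered here as a textbook illustration rather than as the method used in the experiment. An object of known physical height $H$ that appears $h$ pixels tall in an image taken with focal length $f$ (in pixels) lies at distance $Z$; with hypothetical values of a 1.5 m tall car, 100 pixels in the image and $f = 800$ pixels:

$$
h = \frac{f\,H}{Z} \quad\Longrightarrow\quad Z = \frac{f\,H}{h} = \frac{800 \times 1.5\ \text{m}}{100} = 12\ \text{m}.
$$

Without prior knowledge of $H$, a single image only fixes the ratio $H/Z$, which is why one image alone cannot yield a distance.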

The self-supervised learning algorithm developed for and used in the in-orbit experiment was first tested thoroughly on quadrotors in the TU Delft CyberZoo, where it proved both valuable and robust.

“It was very exciting to see, for the first time, a drone in space learning with cutting-edge AI methods,” added Dario Izzo, who coordinated the scientific contribution from ESA’s Advanced Concepts Team. “At ESA, and in particular here at the ACT, we have worked towards this goal for the past 5 years. In space applications, machine learning is not considered a reliable approach to autonomy: a ‘bad’ learning result may lead to a catastrophic failure of the entire mission. Our approach, based on the self-supervised learning paradigm, is highly reliable and supports the drone’s autonomy: a similar learning algorithm was successfully applied to self-driving cars, a task where reliability is also of paramount importance.”

The little drone that successfully learned “to see” was one of the SPHERES (Synchronized Position Hold Engage and Reorient Experimental Satellite) drones on board the ISS. The SPHERES can rotate and translate in all directions. Twelve carbon dioxide thrusters provide control and propulsion, allowing the satellites to manoeuvre with great precision in the zero-gravity environment of the station. The MIT Space Systems Laboratory, in conjunction with NASA, DARPA and Aurora Flight Sciences, developed and operates the SPHERES system to provide a safe and reusable zero-gravity platform for testing sensor, control and autonomy technologies for use in satellites. Developing these technologies is a key step towards new types of satellite systems.

The drone experiments on Earth were performed by Kevin van Hecke for his MSc thesis. He also went to the MIT Space Systems Lab to port the drone programs to the software required by the SPHERES: “It was my life-long dream to work on space technology, but that I would contribute to a learning robot in space even exceeds my wildest dreams!”

Indeed, the experiment seems to hold promise for the future: “This is a further step in our quest for truly autonomous space systems, increasingly needed for deep space exploration, complex operations, for reducing costs, and increasing capabilities and science opportunities”, comments Leopold Summerer, head of ESA’s Advanced Concepts Team.

People Involved:

TU Delft MAV-Lab: Guido de Croon, Laurens van der Maaten, Kevin van Hecke.

Massachusetts Institute of Technology: Timothy P. Setterfield, Alvar Saenz-Otero.

Advanced Concepts Team: Dario Izzo, Daniel Hennes.
